Introduction

DNS (Domain Name System) records are the backbone of internet connectivity for web servers, mail servers, and other networked systems. Missing or misconfigured DNS records can disrupt services, making websites unreachable or causing email delivery to fail. PowerShell's Resolve-DnsName cmdlet, part of the DnsClient module on Windows, lets administrators query, validate, and troubleshoot DNS configurations interactively or from scripts.

This article introduces a PowerShell script that simplifies checking DNS resolution for multiple domains. With it, you can automate DNS checks, verify that record updates have propagated, and make DNS health part of your routine security and network-reliability checks.

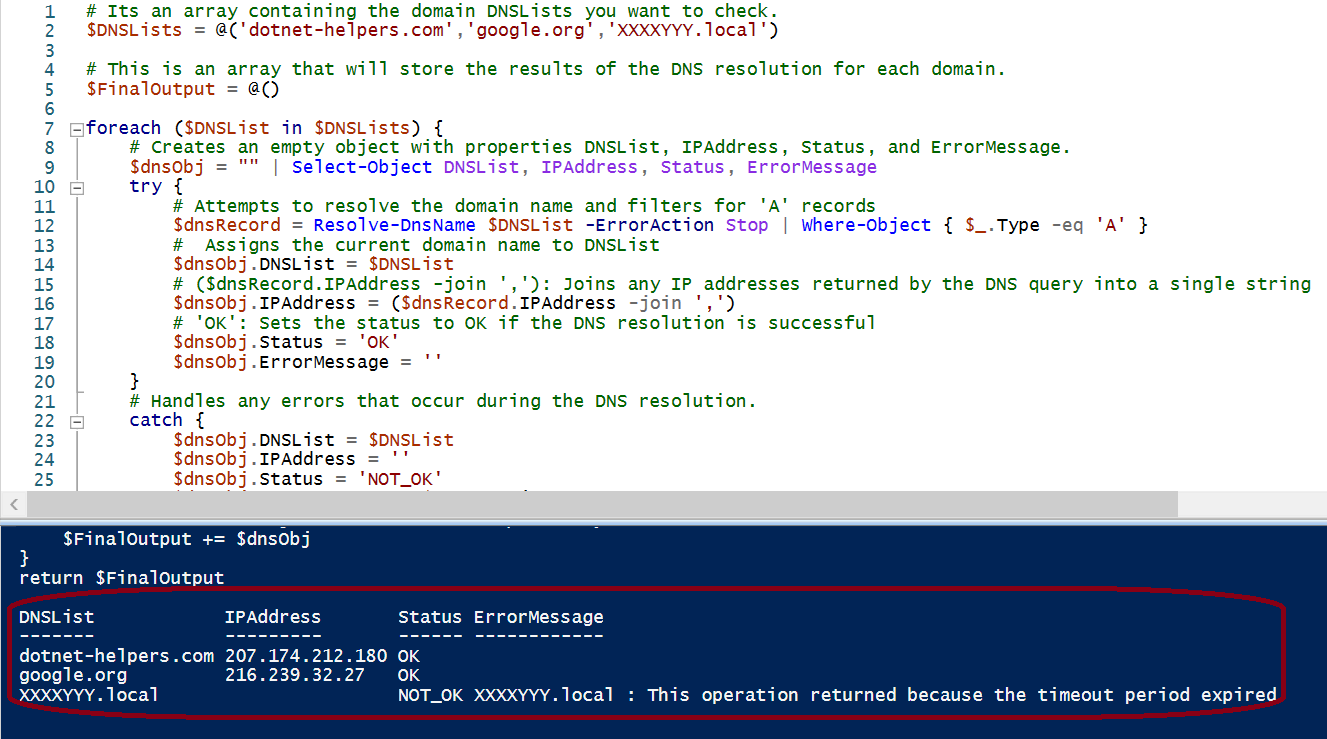

The script processes a list of domain names and retrieves each name's resolution status, IP addresses, and any error message, giving you an overview of all domains in a single run.
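In the script explained later in this article, the domain list is hardcoded in $DNSLists. If you would rather maintain the list in a text file, a small helper could load it. The sketch below assumes a hypothetical file with one domain name per line, where blank lines and lines starting with # are ignored; the function name Get-DomainList is illustrative and not part of the original script.

```powershell
# Hypothetical helper: read domain names from a plain-text file.
# Assumed format: one name per line; blank lines and '#' comment lines are skipped.
function Get-DomainList {
    param(
        [Parameter(Mandatory)]
        [string]$Path
    )

    Get-Content -Path $Path |
        ForEach-Object { $_.Trim() } |                      # drop surrounding whitespace
        Where-Object { $_ -and -not $_.StartsWith('#') }    # skip empty and comment lines
}
```

You could then replace the hardcoded array with $DNSLists = @(Get-DomainList -Path '.\domains.txt'), where domains.txt is a placeholder path. Wrapping the call in @() keeps the result an array even when the file contains a single name.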

If you manage web or mail servers, you know how heavily they rely on DNS records. Incorrect or missing records can cause all sorts of problems, from users being unable to reach your website to emails going undelivered. Fortunately, the PowerShell Resolve-DnsName cmdlet exists, and with it, DNS record monitoring can be automated through scripting.
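The script in this article checks only A records, but mail delivery depends on MX records. As a hedged sketch, the hypothetical Get-PreferredMx function below picks the mail exchanger(s) with the lowest preference value from records shaped like those returned by Resolve-DnsName -Type MX, which expose NameExchange and Preference properties. The records are passed in as a parameter, so the selection logic can be tried without a network connection.

```powershell
# Hypothetical helper: return the preferred mail exchanger host name(s).
# Lower Preference values are tried first by sending mail servers.
function Get-PreferredMx {
    param(
        [Parameter(Mandatory)]
        [object[]]$Record
    )

    # Resolve-DnsName can also return other record types (e.g. A records
    # in the additional section), so keep only the MX records.
    $mx = @($Record | Where-Object { $_.Type -eq 'MX' })
    if ($mx.Count -eq 0) { return }

    # Find the lowest preference value and return every host that has it.
    $lowest = ($mx | Measure-Object -Property Preference -Minimum).Minimum
    $mx | Where-Object { $_.Preference -eq $lowest } |
        Select-Object -ExpandProperty NameExchange
}
```

In real use you might call it as Get-PreferredMx -Record (Resolve-DnsName -Name 'dotnet-helpers.com' -Type MX -ErrorAction Stop). If a domain has no MX records at all, the lookup itself returns nothing useful, which is worth treating as a failure in its own right.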

This script checks the DNS resolution status of a list of domain names, using the DNS servers configured on the machine where it runs, and returns detailed results for each name: the IP address, the status, and any error message.

When to Use This Script

You might use this script in several scenarios:

Monitoring and Troubleshooting DNS Issues: If you manage multiple domains and want to verify that they resolve correctly, this script gives you a quick way to gather and assess that information. To compare answers from different DNS servers, Resolve-DnsName also accepts a -Server parameter.
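As a sketch of checking the same names against several DNS servers, the hypothetical function below calls Resolve-DnsName with its -Server and -Type A parameters for every name/server pair and emits one result object per pair, using the script's property names plus a Server column. The -Resolver parameter is an assumption added so the logic can be exercised with a fake resolver; in normal use you would leave it at its default.

```powershell
# Hypothetical function: resolve every name against every listed DNS server.
function Test-DnsAcrossServers {
    param(
        [Parameter(Mandatory)]
        [string[]]$Name,

        [Parameter(Mandatory)]
        [string[]]$Server,

        # Hypothetical injection point; the default queries one server for A records.
        [scriptblock]$Resolver = {
            param($DnsName, $DnsServer)
            Resolve-DnsName -Name $DnsName -Server $DnsServer -Type A -ErrorAction Stop
        }
    )

    foreach ($n in $Name) {
        foreach ($s in $Server) {
            try {
                # Keep only A records; CNAME hops in the answer are ignored.
                $records = @(& $Resolver $n $s | Where-Object { $_.Type -eq 'A' })
                if ($records.Count -eq 0) { throw "No A record returned by $s" }
                [PSCustomObject]@{
                    DNSList      = $n
                    Server       = $s
                    IPAddress    = ($records.IPAddress -join ',')
                    Status       = 'OK'
                    ErrorMessage = ''
                }
            }
            catch {
                # Any lookup failure (or missing A record) becomes a NOT_OK row.
                [PSCustomObject]@{
                    DNSList      = $n
                    Server       = $s
                    IPAddress    = ''
                    Status       = 'NOT_OK'
                    ErrorMessage = $_.Exception.Message
                }
            }
        }
    }
}
```

For example, Test-DnsAcrossServers -Name 'dotnet-helpers.com','google.org' -Server '8.8.8.8','1.1.1.1' | Format-Table -AutoSize would compare Google's and Cloudflare's public resolvers side by side.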

Validation of DNS Configurations: When you’re updating DNS records (e.g., for a new website, email configuration, or any other service), you can use this script to confirm that the records have propagated properly and are resolving as expected.
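To judge propagation, you need answers from several servers for the same name. Assuming you already have per-server results as objects with DNSList, Server, and IPAddress properties (IPAddress being a comma-separated string, as in the script's output), the hypothetical Test-DnsPropagation function below reports whether all servers agree. It is only a sketch: it does not account for TTLs, or for providers that legitimately rotate or subset round-robin answers between queries.

```powershell
# Hypothetical function: check whether all servers returned the same A-record set.
function Test-DnsPropagation {
    param(
        [Parameter(Mandatory)]
        [object[]]$Result
    )

    foreach ($group in ($Result | Group-Object -Property DNSList)) {
        # Normalise each server's answer (split, trim, sort, re-join) so that
        # '192.0.2.2,192.0.2.1' and '192.0.2.1, 192.0.2.2' compare as equal.
        $answers = foreach ($item in $group.Group) {
            ($item.IPAddress -split ',' | ForEach-Object { $_.Trim() } |
                Where-Object { $_ } | Sort-Object) -join ','
        }

        # A single distinct normalised answer means every server agreed.
        $distinct = @($answers | Sort-Object -Unique)
        [PSCustomObject]@{
            DNSList    = $group.Name
            Servers    = $group.Count
            Consistent = ($distinct.Count -eq 1)
            Answers    = $distinct -join ' | '
        }
    }
}
```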

Security and Compliance Checks: Verifying that your domains resolve, and that they resolve to the addresses you expect, can be part of your security checks, helping you spot incorrect or hijacked DNS records that could enable phishing or man-in-the-middle attacks.
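A successful lookup alone does not prove a record is correct. One way to catch unexpected changes is to compare the resolved addresses with a baseline you maintain. In this sketch, the function name Compare-DnsBaseline and the baseline hashtable (domain name mapped to allowed IPs) are hypothetical, and the input is assumed to have the DNSList and IPAddress properties the script produces.

```powershell
# Hypothetical function: flag resolved addresses that are not in an expected baseline.
function Compare-DnsBaseline {
    param(
        [Parameter(Mandatory)]
        [object[]]$Result,

        [Parameter(Mandatory)]
        [hashtable]$Expected    # e.g. @{ 'example.com' = @('192.0.2.10') }
    )

    foreach ($item in $Result) {
        # Only compare names that have a baseline entry.
        if (-not $Expected.ContainsKey($item.DNSList)) { continue }

        $actual  = @($item.IPAddress -split ',' | ForEach-Object { $_.Trim() } | Where-Object { $_ })
        $allowed = @($Expected[$item.DNSList])

        # Any resolved address outside the allowed list is suspicious.
        $unexpected = @($actual | Where-Object { $allowed -notcontains $_ })

        [PSCustomObject]@{
            DNSList    = $item.DNSList
            Resolved   = $actual -join ','
            Unexpected = $unexpected -join ','
            Match      = ($actual.Count -gt 0 -and $unexpected.Count -eq 0)
        }
    }
}
```

A row with Match set to $false is a prompt to investigate, not proof of an attack: CDNs and load balancers can change addresses legitimately, so the baseline needs maintaining.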

Network Operations: In a larger network or data center, you might want to regularly check the resolution of internal or external domains to ensure consistent access to critical services.
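For regular checks, one option is to run the script on a schedule (for example, with Windows Task Scheduler) and keep a history of results. The hypothetical helper below appends each run's output to a CSV file with a timestamp using Export-Csv -Append; the function name and the log path in the usage note are assumptions.

```powershell
# Hypothetical helper: append one run's DNS results to a CSV log with a timestamp.
function Add-DnsCheckLog {
    param(
        [Parameter(Mandatory)]
        [object[]]$Result,

        [Parameter(Mandatory)]
        [string]$Path
    )

    # One sortable (ISO 8601) timestamp shared by every row of this run.
    $stamp = (Get-Date).ToString('s')

    $Result |
        Select-Object @{ Name = 'CheckedAt'; Expression = { $stamp } },
                      DNSList, IPAddress, Status, ErrorMessage |
        Export-Csv -Path $Path -Append -NoTypeInformation   # creates the file on first use
}
```

After the script runs, a call such as Add-DnsCheckLog -Result $FinalOutput -Path 'C:\Logs\dns-checks.csv' (placeholder path) adds one timestamped row per domain, which makes intermittent failures easy to spot later.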

Explanation of the PowerShell DNS Record Monitoring Script

Here’s a breakdown of how the script works:

```powershell
# An array containing the domain names you want to check.
$DNSLists = @('dotnet-helpers.com', 'google.org', 'XXXXYYY.local')

# This array stores the DNS resolution result for each domain.
$FinalOutput = @()

foreach ($DNSList in $DNSLists) {
    # Create an empty object with the properties DNSList, IPAddress, Status, and ErrorMessage.
    $dnsObj = "" | Select-Object DNSList, IPAddress, Status, ErrorMessage

    try {
        # Resolve the domain name and keep only the 'A' (IPv4) records.
        $dnsRecord = Resolve-DnsName $DNSList -ErrorAction Stop | Where-Object { $_.Type -eq 'A' }

        # A name can resolve without returning any A record (for example, an IPv6-only host);
        # treat that as a failure instead of reporting OK with an empty address.
        if (-not $dnsRecord) { throw "No A record found for $DNSList" }

        # Assign the current domain name to DNSList.
        $dnsObj.DNSList = $DNSList

        # Join all IP addresses returned by the DNS query into one comma-separated string.
        $dnsObj.IPAddress = ($dnsRecord.IPAddress -join ',')

        # Set the status to OK because the DNS resolution succeeded.
        $dnsObj.Status = 'OK'
        $dnsObj.ErrorMessage = ''
    }
    catch {
        # Handle any error that occurs during DNS resolution.
        $dnsObj.DNSList = $DNSList
        $dnsObj.IPAddress = ''
        $dnsObj.Status = 'NOT_OK'
        $dnsObj.ErrorMessage = $_.Exception.Message
    }

    # Add the result object to the $FinalOutput array.
    $FinalOutput += $dnsObj
}

return $FinalOutput
```
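If you want to reuse the check from other scripts or pipe domain names into it, the logic above can be wrapped in a function. The sketch below is one way to do that, not the article's original code: Test-DnsResolution and its -Resolver parameter are hypothetical names (-Resolver exists only so the function can be tested with a fake resolver), it emits [PSCustomObject] results directly instead of collecting them with +=, and it reports NOT_OK when a name returns no A record.

```powershell
# Hypothetical function version of the DNS check.
function Test-DnsResolution {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory, ValueFromPipeline)]
        [string[]]$DNSList,

        # Hypothetical injection point; the default asks the system resolver for A records.
        [scriptblock]$Resolver = {
            param($Name)
            Resolve-DnsName -Name $Name -Type A -ErrorAction Stop
        }
    )

    process {
        foreach ($name in $DNSList) {
            try {
                $records = @(& $Resolver $name | Where-Object { $_.Type -eq 'A' })
                if ($records.Count -eq 0) { throw "No A record found for $name" }
                [PSCustomObject]@{
                    DNSList      = $name
                    IPAddress    = ($records.IPAddress -join ',')
                    Status       = 'OK'
                    ErrorMessage = ''
                }
            }
            catch {
                # Lookup errors and missing A records are reported, not thrown.
                [PSCustomObject]@{
                    DNSList      = $name
                    IPAddress    = ''
                    Status       = 'NOT_OK'
                    ErrorMessage = $_.Exception.Message
                }
            }
        }
    }
}
```

Usage: 'dotnet-helpers.com','google.org','XXXXYYY.local' | Test-DnsResolution | Format-Table -AutoSize. Emitting objects to the pipeline also avoids +=, which copies the whole array on every addition because PowerShell arrays have a fixed size.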