Kubernetes provides a robust mechanism for managing application deployments, ensuring high availability and smooth rollouts. The kubectl rollout status command is essential for monitoring deployment progress, and there are several ways to refresh Pods to apply updates or troubleshoot issues. In this article, we’ll look at how to check the rollout status of a Deployment, when kubectl rollout restart is necessary, and how to restart Pods in a Kubernetes cluster.


Introduction:

In this blog post, we’ll explore different methods to restart a Pod in Kubernetes. It’s important to note that in Kubernetes, “restarting a Pod” doesn’t happen in the traditional sense, like restarting a service or a server. When we say a Pod is “restarted,” it usually means the Pod is deleted and a new one is created to replace it. The new Pod runs the same container(s) as the one that was deleted.

When to Use kubectl rollout restart

The kubectl rollout restart command is particularly useful in the following cases:

- After a ConfigMap or Secret Update: If a pod depends on a ConfigMap or Secret and the values change, the pods won’t restart automatically. Running a rollout restart ensures they pick up the new configuration.

- When a Deployment Becomes Unstable: If a deployment is experiencing intermittent failures or connectivity issues, restarting can help resolve problems.

- To Clear Stale Connections: When applications hold persistent connections to databases or APIs, a restart can help clear old connections and establish new ones.

- For Application Performance Issues: If the application is behaving unexpectedly or consuming excessive resources, restarting the pods can help reset its state.

- During Planned Maintenance or Upgrades: Ensuring all pods restart as part of a routine update helps maintain consistency across the deployment.
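The ConfigMap case from the list above can be sketched as a small shell helper. This is a minimal sketch, not a definitive workflow: the function name reload_config and the file name app-config.yaml are hypothetical, and it assumes kubectl is already pointed at the right cluster.

```shell
# Apply an updated ConfigMap (or Secret) manifest, then restart the
# Deployment that reads it.
# Arguments: <manifest-file> <deployment-name> [namespace]
reload_config() {
  local manifest="$1" deployment="$2" namespace="${3:-default}"

  # Push the new values to the cluster.
  kubectl apply -f "$manifest" -n "$namespace" || return 1

  # Pods that read the values as environment variables keep the old ones,
  # so trigger a rolling restart of the Deployment.
  kubectl rollout restart "deployment/$deployment" -n "$namespace" || return 1

  # Wait until the rollout finishes, or give up after 2 minutes.
  kubectl rollout status "deployment/$deployment" -n "$namespace" --timeout=120s
}

# Example (hypothetical file name):
# reload_config app-config.yaml demo-deployment
```

Ending with kubectl rollout status makes the helper usable in scripts, since that command exits with a non-zero status if the rollout does not complete within the timeout.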

Sample Deployment created for testing:

The spec field of the Pod template contains the configuration for the containers running inside the Pod. The restartPolicy field is one of the configuration options available in the spec field. It controls whether the containers in a Pod are restarted when they exit. The Deployment configuration file later in this section sets the restartPolicy field explicitly in the Pod spec.

In a Pod spec, the restartPolicy field accepts one of the following three values:

- Always: Always restart the container when it terminates.
- OnFailure: Restart the container only when it terminates with failure.
- Never: Never restart the container after it terminates.

If you don’t explicitly specify the restartPolicy field, Kubernetes sets the restartPolicy to Always by default. Note that Pods managed by a Deployment only support Always, so the YAML below sets it explicitly purely for illustration.

In this file, we have defined a Deployment named demo-deployment that manages a single Pod. The Pod has one container running the alpine:3.15 image.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: demo-deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: alpine-demo
      template:
        metadata:
          labels:
            app: alpine-demo
        spec:
          restartPolicy: Always
          containers:
          - name: alpine-container
            image: alpine:3.15
            command: ["/bin/sh","-c"]
            args: ["echo Hello World! && sleep infinity"]

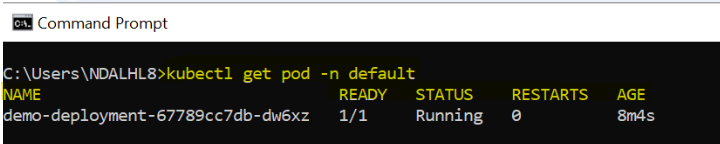

After applying the file, list the Pods with kubectl get pods. Look for the Pod with a name starting with demo-deployment and ensure that it’s in the Running state. Note that Kubernetes creates unique Pod names by appending generated characters to the Deployment name, so your Pod name will differ from the one used in the examples in this post.
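For readers following along, creating the Deployment and finding its Pod could look like the sketch below. The file name demo-deployment.yaml and the function names are assumptions for illustration. The label app=alpine-demo comes from the manifest above.

```shell
# Create (or update) the Deployment from a manifest file.
# The default file name demo-deployment.yaml is an assumption.
create_demo() {
  kubectl apply -f "${1:-demo-deployment.yaml}" -n default
}

# List only the Pods that carry the Deployment's app=alpine-demo label.
list_demo_pods() {
  kubectl get pods -l app=alpine-demo -n default
}

# Print each matching Pod's name and effective restartPolicy, one per line.
show_restart_policy() {
  kubectl get pods -l app=alpine-demo -n default \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.restartPolicy}{"\n"}{end}'
}

# Example:
# create_demo && list_demo_pods && show_restart_policy
```

Filtering by label instead of by name is convenient here because the generated Pod name changes every time the Pod is replaced.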

Restart Kubernetes Pod

In this section, we’ll explore the methods you can use to restart a Kubernetes Pod.

Method 1: Deleting the Pod

One of the easiest methods to restart a running Pod is to simply delete it. Run the following command to see the Pod restart in action:

    # Syntax
    kubectl delete pod <POD-NAME>
    # Example: delete the Pod
    kubectl delete pod demo-deployment-67789cc7db-dw6xz -n default
    # Check the status after the deletion
    kubectl get pod -n default

After running the command above, you will receive a confirmation that the Pod has been deleted. The job of a Deployment is to ensure that the specified number of Pod replicas is running at all times. Therefore, after you delete the Pod, Kubernetes automatically creates a new Pod to replace the deleted one.
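Because the generated Pod name changes with every replacement, it can be handy to delete by label and then wait for the Deployment to recover. The helper below is a sketch under that assumption. The name restart_by_delete is hypothetical, and the 60-second timeout is an arbitrary choice.

```shell
# Delete every Pod matching a label, then wait for the replacement.
# Arguments: <label-selector> <deployment-name> [namespace]
restart_by_delete() {
  local selector="$1" deployment="$2" namespace="${3:-default}"

  # By default, kubectl delete returns only after the Pods are gone.
  kubectl delete pod -l "$selector" -n "$namespace" || return 1

  # Returns once the Deployment reports its replicas as available again.
  kubectl rollout status "deployment/$deployment" -n "$namespace" --timeout=60s
}

# Example:
# restart_by_delete app=alpine-demo demo-deployment
```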

Method 2: Using the “kubectl rollout restart” command

You can restart a Pod using the kubectl rollout restart command without making any modifications to the Deployment configuration. To see the Pod restart in action, run the following command:

    # Syntax
    kubectl rollout restart deployment/<Deployment-Name>
    # Example
    kubectl rollout restart deployment/demo-deployment

After running the command, kubectl confirms that the Deployment has been restarted.

Next, let’s list the Pods in our system by running the kubectl get pod command.

While the rollout is in progress, the output shows the old Pod terminating alongside the new Pod. If you run the kubectl get pods command again once the rollout has finished, you’ll see only the new Pod in a Running state.
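Under the hood, kubectl rollout restart stamps a kubectl.kubernetes.io/restartedAt annotation on the Pod template, and that template change is what triggers an ordinary rolling update. The sketch below shows one way to inspect the result. The function names are hypothetical.

```shell
# Print the timestamp that the last rollout restart wrote into the
# Pod template (empty output if the Deployment was never restarted this way).
# Arguments: <deployment-name> [namespace]
last_restart() {
  local deployment="$1" namespace="${2:-default}"
  kubectl get deployment "$deployment" -n "$namespace" \
    -o jsonpath='{.spec.template.metadata.annotations.kubectl\.kubernetes\.io/restartedAt}'
}

# List the Deployment's revisions; each restart adds a new one because
# the Pod template changed.
restart_history() {
  local deployment="$1" namespace="${2:-default}"
  kubectl rollout history "deployment/$deployment" -n "$namespace"
}

# Example:
# last_restart demo-deployment
# restart_history demo-deployment
```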

Is There Any Downtime When Restarting a Kubernetes Pod?

The Deployment resource in Kubernetes has a default rolling update strategy, which allows for restarting Pods without causing downtime. Here’s how it works: Kubernetes gradually replaces the old Pods with the new version, minimizing the impact on users and ensuring the system remains available throughout the update process.

To restart Pods without downtime, rely on the Deployment’s rolling update, which you can trigger either by updating the Deployment or by running the kubectl rollout restart command (Method 2). Manually deleting a Pod (Method 1) is less suitable because there might be a brief period of downtime: when you delete a Pod in a Deployment, the old Pod is removed right away, but the new Pod takes some time to start up. With a single replica, as in our demo Deployment, nothing serves requests in the meantime.
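One way to observe the difference is to poll the Deployment’s available replica count from a second terminal while restarting. This is only a sketch: the function name available_replicas is hypothetical, and it assumes the status field is left out of the API response when the count is zero, which is why the helper falls back to 0.

```shell
# Print how many replicas of a Deployment are currently available.
# Arguments: <deployment-name> [namespace]
available_replicas() {
  local deployment="$1" namespace="${2:-default}" count

  # An empty result is treated as zero available replicas.
  count="$(kubectl get deployment "$deployment" -n "$namespace" \
    -o jsonpath='{.status.availableReplicas}')" || return 1
  echo "${count:-0}"
}

# Example: poll once per second while restarting in another terminal.
# while true; do available_replicas demo-deployment; sleep 1; done
```

With the single-replica demo Deployment, the count should drop to 0 for a moment after a manual delete, while it should stay at 1 or above during kubectl rollout restart with the default strategy.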

Rolling update strategy

You can confirm that Kubernetes uses a rolling update strategy by fetching the Deployment details using the following command:

    # Syntax
    kubectl describe deployment/<Deployment-Name>
    # Example
    kubectl describe deployment/demo-deployment

In the output of the command above, look for the RollingUpdateStrategy field, which has a default value of 25% max unavailable, 25% max surge. 25% max unavailable means that during a rolling update, at most 25% of the desired number of Pods can be unavailable. 25% max surge means that the total number of Pods can temporarily exceed the desired count by up to 25%, so that the application stays available as old Pods are brought down. Both values can be adjusted to suit the application’s traffic requirements.
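To make the percentages concrete: Kubernetes rounds max unavailable down and max surge up when converting them to Pod counts. The sketch below reproduces that arithmetic and shows one way to change the strategy on a live Deployment. The function names are hypothetical, and set_rolling_strategy assumes both arguments are percentages such as 0% or 50%.

```shell
# Compute the Pod limits derived from percentage settings.
# maxUnavailable is rounded down, maxSurge is rounded up.
# Arguments: <replicas> [max-unavailable-percent] [max-surge-percent]
rolling_bounds() {
  local replicas="$1" unavailable_pct="${2:-25}" surge_pct="${3:-25}"
  local max_unavailable=$(( replicas * unavailable_pct / 100 ))
  local max_surge=$(( (replicas * surge_pct + 99) / 100 ))
  echo "maxUnavailable=$max_unavailable maxSurge=$max_surge"
}

# Change the rolling update strategy of a Deployment.
# Arguments: <deployment-name> <max-unavailable-%> <max-surge-%>
set_rolling_strategy() {
  local deployment="$1" max_unavailable="$2" max_surge="$3"
  kubectl patch deployment "$deployment" --type merge -p \
    "{\"spec\":{\"strategy\":{\"rollingUpdate\":{\"maxUnavailable\":\"$max_unavailable\",\"maxSurge\":\"$max_surge\"}}}}"
}

# Examples:
# rolling_bounds 1     # maxUnavailable=0 maxSurge=1
# rolling_bounds 10    # maxUnavailable=2 maxSurge=3
# set_rolling_strategy demo-deployment 0% 50%
```

For the single-replica demo Deployment, the defaults work out to 0 unavailable and 1 surge Pod, which is why a rolling restart starts the new Pod before removing the old one.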

Conclusion

Kubernetes provides multiple methods to restart Pods, ensuring seamless application updates and issue resolution. The best approach depends on the use case:

- For minimal disruption and rolling updates, kubectl rollout restart deployment/<Deployment-Name> is the recommended method. It triggers a controlled restart of Pods without causing downtime.

- For troubleshooting individual Pods, manually deleting a Pod (kubectl delete pod <POD-NAME>) allows Kubernetes to recreate it automatically. However, this approach may introduce brief downtime.

- For configuration updates, restarting Pods after modifying a ConfigMap or Secret ensures that new configurations take effect without redeploying the entire application.

Ultimately, using the rolling update strategy provided by Kubernetes ensures high availability, reducing service disruptions while refreshing Pods efficiently.