Introduction

In the fast-paced world of cloud-native applications, Azure Kubernetes Service (AKS) has become a go-to platform for DevOps teams across the world. However, managing and troubleshooting Kubernetes clusters can be challenging, especially when dealing with issues in pods, deployments, or containers. Thankfully, Kubernetes provides a powerful command-line tool called kubectl that allows you to interact with your AKS cluster. This article walks through the most essential kubectl commands to help DevOps engineers and cloud professionals effectively troubleshoot issues in their AKS clusters.

When to Use These Commands

You should use these kubectl commands when:

- Pods are in a CrashLoopBackOff or Error state.

- A deployment is not scaling as expected.

- Containers fail to start or exhibit unexpected behavior.

- Networking issues prevent services from communicating.

- You need to inspect logs to understand what’s happening inside a container.

- You want to check the status and events related to a pod or deployment.

- You need to execute commands inside a running container for debugging purposes.

Essential Kubectl Commands for Troubleshooting in AKS

1. Get the Status of Pods, Deployments, and Services

This command lists all the pods, deployments, or services in a specific namespace. It helps you quickly identify if any pods are in a CrashLoopBackOff, Pending, or Error state.

    kubectl get pods
    kubectl get deployments
    kubectl get services

    # Example:
    kubectl get pods -n <namespace>
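In a busy namespace the failing pods can be buried among healthy ones. The following is a minimal sketch of a filter for that case; the helper name `unhealthy_pods` is hypothetical, and it assumes the default column order of `kubectl get pods` for a single namespace (NAME, READY, STATUS, RESTARTS, AGE).

```shell
# Keep the header row plus any pod whose STATUS is not Running or Completed.
# Assumes the default columns: NAME READY STATUS RESTARTS AGE
# (with --all-namespaces an extra NAMESPACE column shifts STATUS to field 4).
unhealthy_pods() {
  awk 'NR == 1 || ($3 != "Running" && $3 != "Completed")'
}

# Usage (requires access to a cluster):
#   kubectl get pods -n <namespace> | unhealthy_pods
```

Note that a pod can report Running while its containers are not ready, so the READY column is still worth a glance for anything this filter hides.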

2. Describe a Pod or Deployment

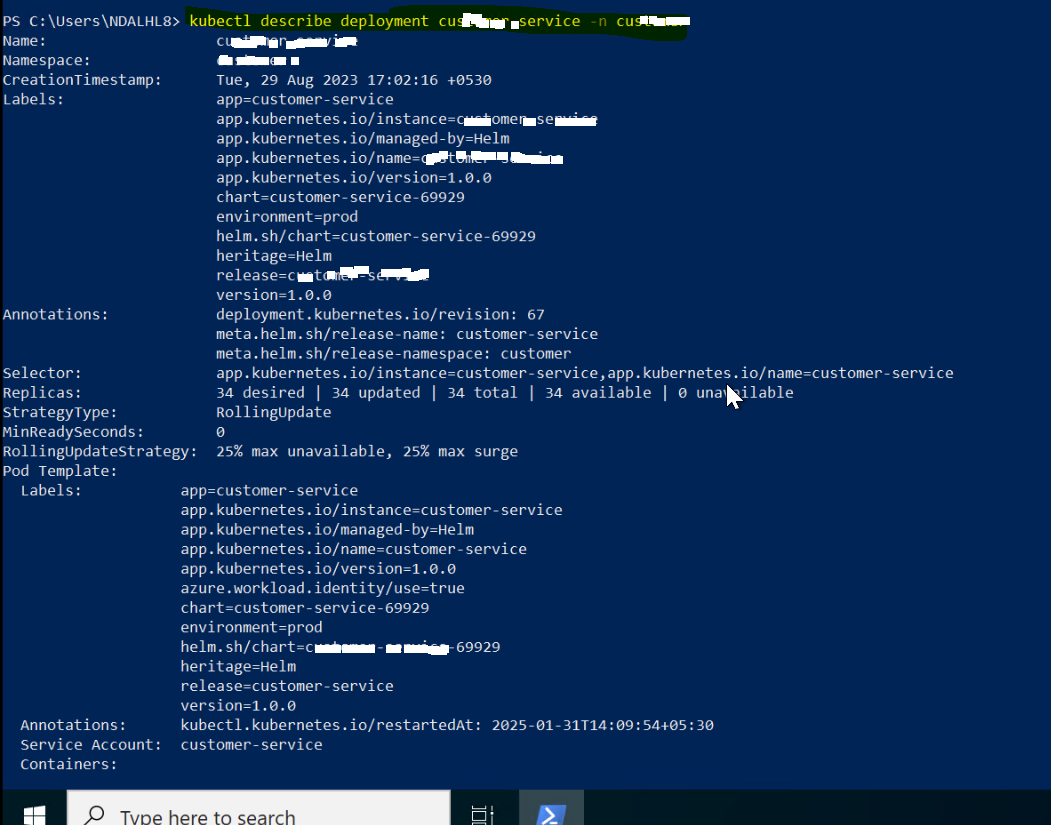

The kubectl describe command provides detailed information about a specific Kubernetes resource, such as a pod, node, or deployment. Running kubectl describe <resource> <resource-name> -n <namespace> shows the resource's configuration, status, conditions, and recent events, which helps you understand why a pod might be failing or why a deployment isn’t scaling correctly.

    kubectl describe pod <pod-name>
    kubectl describe deployment <deployment-name>

    # Example:
    kubectl describe pod my-pod -n <namespace>
    kubectl describe deployment customer-service -n customer

The output will contain detailed information about the specified pod, including its metadata, container information, conditions, and events. This information can be invaluable for troubleshooting issues with the pod, such as initialization problems, readiness issues, or events related to its lifecycle.
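The describe output can be long, and the events at the bottom are often the most useful part when troubleshooting. As a rough sketch, the hypothetical helper below trims the output down to that section; it assumes the section starts at a line beginning with `Events:` and runs to the end of the output.

```shell
# Print only the Events section of `kubectl describe` output read from stdin.
# Assumes Events is the final section and starts at a line beginning "Events:".
describe_events() {
  awk '/^Events:/ { found = 1 } found'
}

# Usage (requires access to a cluster):
#   kubectl describe pod <pod-name> -n <namespace> | describe_events
```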

3. View Pod Logs

This command fetches the logs from a specific pod, which is crucial for debugging application-level issues inside the container. Use kubectl logs <pod-name> -n <namespace> to view the logs of a pod in a given namespace and look for errors or exceptions in your application code. Add the -f flag to follow the logs in real time.

    kubectl logs <pod-name>

    # Example:
    kubectl logs my-pod -n <namespace>
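For a pod in CrashLoopBackOff, the current container has often just restarted and has little to show, so the logs of the previous container instance tend to be more informative. The sketch below wraps the `--previous` and `--tail` flags of kubectl logs; the helper name `previous_logs` and the default of 100 lines are illustrative assumptions.

```shell
# Show the last lines logged by the previous (crashed) container instance.
# Arguments: <pod-name> <namespace> [line-count, default 100]
previous_logs() (
  pod="$1"
  ns="$2"
  lines="${3:-100}"
  kubectl logs "$pod" -n "$ns" --previous --tail="$lines"
)

# Usage (requires access to a cluster):
#   previous_logs my-pod <namespace>
#   previous_logs my-pod <namespace> 500
```

If the pod runs more than one container, add `-c <container-name>` to select which container's logs to read.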

4. Execute Commands in a Running Container

This command allows you to open a shell inside a running container. It’s useful for running diagnostic commands or inspecting the file system directly within the container.

    # Linux pod:
    kubectl exec -it <pod-name> -- /bin/bash

    # Windows pod:
    kubectl exec -it <pod-name> -- powershell

    # Example:
    kubectl exec -it my-pod -n <namespace> -- /bin/bash

5. Check Event Logs for a Namespace

This command lists the events in the current namespace, or in the namespace given with -n, sorted by creation time. Events can provide insights into what’s happening in your cluster, such as why a pod was evicted or why a deployment failed.

    kubectl get events --sort-by=.metadata.creationTimestamp

    # Example:
    kubectl get events -n <namespace> --sort-by=.metadata.creationTimestamp

Real-World Scenario: Troubleshooting Pod Initialization

Suppose you encounter an issue where pods are not initializing correctly. You can use kubectl get events --all-namespaces to identify events related to pod initialization failures, helping you pinpoint the root cause.
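Listing every event across all namespaces can produce a lot of noise. One possible way to narrow the scenario above is to keep only warning events that involve pods, using a field selector. The helper name `pod_warning_events` is hypothetical.

```shell
# List Warning events that involve pods, across all namespaces, oldest first.
pod_warning_events() {
  kubectl get events --all-namespaces \
    --field-selector type=Warning,involvedObject.kind=Pod \
    --sort-by=.metadata.creationTimestamp
}

# Usage (requires access to a cluster):
#   pod_warning_events
```

Events are only retained for a limited time, so it helps to run this soon after the failure occurs.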

6. View Pod and Node Resource Utilization

This command shows the CPU and memory usage of pods or nodes. It’s useful for identifying resource bottlenecks that might be causing issues in your AKS cluster. It relies on the Kubernetes Metrics Server being available in the cluster.

    kubectl top pod
    kubectl top node

    # Example:
    kubectl top pod -n <namespace>
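When hunting for a resource bottleneck, it can help to sort the output so the heaviest consumers appear first. The sketch below uses the `--sort-by` flag of kubectl top, which accepts `cpu` or `memory`; the helper name `top_pods_by` and the choice of memory as the default are assumptions made for illustration.

```shell
# List pods in a namespace ordered by resource usage, heaviest first.
# Arguments: <namespace> [cpu|memory, default memory]
top_pods_by() (
  ns="$1"
  metric="${2:-memory}"
  kubectl top pod -n "$ns" --sort-by="$metric"
)

# Usage (requires access to a cluster with metrics available):
#   top_pods_by <namespace>
#   top_pods_by <namespace> cpu
```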

Conclusion

Troubleshooting issues in Azure Kubernetes Service (AKS) can be challenging, but with the right kubectl commands, you can quickly identify and resolve problems in your pods, deployments, and containers. By using commands like kubectl get, kubectl describe, kubectl logs, and kubectl exec, you can gain deep insights into the state of your AKS cluster and take corrective actions. Whether you’re dealing with a crashing pod, a misbehaving deployment, or a container that’s not responding, these commands are essential tools in your Kubernetes troubleshooting toolkit.

By mastering these essential kubectl commands, you can minimize downtime, improve operational efficiency, and keep your AKS workloads running smoothly.